A long time ago, when I was a kid, I used to play a video game that I really liked. I'm not going to name it because I'm not sure anyone still cares about it, but it was released in 2000 for Windows 98, and I think there were rumors of a PlayStation 1 release that never happened. The game is currently sold on GOG, Steam, and probably a few other platforms. It runs on Windows, and on Linux thanks to Steam's Proton, though I hear the GOG version runs on plain Wine as well.

Well damn it, I was not satisfied that I couldn't run this 25-year-old game at my 4K resolution and 144Hz. So like any self-respecting masochistic ignorant nerd I said "Sure, I can remake this in Rust". This is how my journey started. According to Ghidra, the game is ~260K lines of source code when decompiled. I'm not "dreaming" of remaking the whole game in Rust, but loading all of the assets in Bevy actually helped the reverse engineering effort, so for now that's where I'm heading.

The information in this blog post isn't new (shoutout to the FringeSpace folks for advice and moral support). However, a lot of the past reverse engineering has been ad hoc, and it sounds like I have already more or less caught up with what has been recovered over the past 25 years. I want to attract and teach people to reverse engineer games for modding and preservation, and I also want a single repository of information about this specific game's file structures, so that's why I'm writing this series of blog posts.

You don't need to know reverse engineering, ASM, Ghidra, or ImHex to enjoy this blog post, but you do need to know your C.

So let's dive right into it. This post's goal is to figure out how the game stores its assets, whatever those are. I downloaded the game through Steam and looked at the files:

The command just sorts the files by size and hides the DLLs. The big outlier is Tachyon.pff, which takes up the majority of the folder's size - 365MB. That's likely our target. I tried running common file identification tools against it and searching online. I did find the FringeSpace community, who had already reverse engineered the file, but the consensus was that the PFF file structure was internal to the company that developed the game. So what can we do? Well, the game reads the file... so it should have code inside of it that reads the file... let's open it in Ghidra and see what happens.

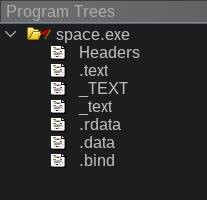

I should note that I had already made a mistake - Tachyon.exe is a small intro app that lets you browse the authors' website, check for updates, and configure the game before running it. It isn't the actual game, which is the 1.8MB space.exe executable that you see in the file listing above. However, this mistake turned out to be fruitful - the main game binary is obfuscated while the intro app isn't. And both the intro app and the main game load the Tachyon.pff file - so we're in luck!

When you open Tachyon.exe in Ghidra it presents you with this view. I'd like to note that my view isn't quite the default - I adjusted the color theme and added a few windows I find useful at the bottom. All of these can be added from the Window pulldown menu. A quick note about Jython - it's Python with Ghidra's Java bindings. It can be useful for quick scripting, but I mostly like having the console around for quick dec->hex->bin conversions.
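If you don't have the Jython console handy, the same dec->hex->bin conversions are a couple of lines in Rust. This is just a small sketch; `describe` and `parse_hex` are names I made up for illustration.

```rust
/// Render one value the three ways that come up constantly while reversing:
/// decimal, hexadecimal and binary.
fn describe(value: u32) -> String {
    format!("{0} = {0:#x} = {0:#b}", value)
}

/// Parse a hex literal as it appears in a listing, with or without the
/// `0x` prefix (for example `0x42e578` or `42e578`).
fn parse_hex(text: &str) -> Option<u32> {
    let digits = text.trim().trim_start_matches("0x");
    u32::from_str_radix(digits, 16).ok()
}
```

For example, `describe(0x2d)` tells you that an index like `param_2[0x2d]` is element 45 of the array.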

So at this point there are several things we can do. Before I dive into what I did, let's think for a second: what is reverse engineering? I like to think of RE as pumping context into math, and you are the pump. Context here is everything you already know, an abstract multidimensional latent space in your head. OK, so what do you know about this game? What do I know about it? Well, I know I want to find out how it reads the Tachyon.pff file, so I can search for strings and see if one of them is tachyon.pff. I also know that the only way to open a file is to ask Windows to open it for you, so we can look for variations of the open() function.

When searching for strings, I was not able to find the full tachyon.pff name in the file. Bummer. But I was able to find these really interesting strings - they have to be used somewhere near where I need to look, right?
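Ghidra has its own string search, but the idea behind it is simple enough to sketch by hand. This is a rough approximation of what a `strings`-style scan does, not Ghidra's actual algorithm; `ascii_strings` is a hypothetical helper and only handles plain ASCII.

```rust
/// Return every run of at least `min_len` printable ASCII bytes,
/// together with the offset where the run starts.
fn ascii_strings(data: &[u8], min_len: usize) -> Vec<(usize, String)> {
    let mut found = Vec::new();
    let mut start: Option<usize> = None;
    // A trailing zero byte acts as a sentinel so a run that touches the
    // end of the data is flushed as well.
    for (i, b) in data.iter().copied().chain(std::iter::once(0u8)).enumerate() {
        let printable = b == b' ' || b.is_ascii_graphic();
        match (printable, start) {
            (true, None) => start = Some(i),
            (false, Some(s)) => {
                if i - s >= min_len {
                    found.push((s, String::from_utf8_lossy(&data[s..i]).into_owned()));
                }
                start = None;
            }
            _ => {}
        }
    }
    found
}
```

Feeding it the bytes of an executable and filtering the result for "pff" is a quick way to get the same kind of leads without opening a disassembler.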

If we click on one of the strings - the listing view will take us to it. From there we can see that Ghidra found exactly one function that references this address (the XREF label) - click on that function and you'll see:

    byte * FUN_0040b0a0(uint *param_1,undefined4 *param_2)
    {
      bool bVar1;
      undefined3 extraout_var;
      byte *pbVar2;
      UINT UVar3;

      bVar1 = FUN_0040aec0(param_1,param_2);
      if (CONCAT31(extraout_var,bVar1) == 0) {
        param_2[0x2d] = 1;
        pbVar2 = NULL;
      }
      else {
        UVar3 = FUN_0040afb0(0,2,param_2);
        FUN_0040afb0(0,0,param_2);
        param_2[0x2c] = UVar3;
        DAT_0047fb44 = UVar3;
        pbVar2 = (byte *)FUN_004063a0(UVar3,4,0,0x42e578);
        FUN_0040af60(pbVar2,UVar3,param_2);
        if ((*(uint *)param_2[0x27] & 1) != 0) {
          FUN_0040b060(pbVar2,UVar3);
        }
        param_2[0x2d] = 0;
      }
      return pbVar2;
    }

Ok, where is that string?

Unfortunately the analysis didn't propagate to the decompiler the fact that this data is human-readable text. Let's look back at where the string is stored - it's at 0x42e578... Oh look, the code passes that as the fourth argument to this other function call - FUN_004063a0. We can right-click on it and change the parameter definitions:

OK, so looking at the code now: we call FUN_0040aec0, and if its result is 0 we return NULL. Otherwise we do some work and call a function that is handed the string "PFF LOADED FILE". OK, that sounds like we're in the right place.

Remember how I mentioned that reverse engineering is pumping context into math? Well, we are looking at some function and we just got a bit of context - it loads a PFF file successfully. Now we need to nudge in context from random directions until we have enough of it to figure out what this function does. I like strings. Human-readable strings are where a lot of context lives for us. During my first run-in with this file I went the manual way. I looked at this function, looked at other functions nearby, looked for the KERNEL32.DLL::_lopen() function to see who called it... Eventually I gathered enough context to figure this function out. However, I also developed a few scripts to help me along the way. One of them is a modification of Ghidra's standard recursive string finder: it now prints not only strings, but also function names and static label names that don't start with FUN_, DAT_, or LAB_ - essentially everything that I have already named manually. Let's run that script on this function:

    CALL FUN_0040b0a0 ()
    @0040b12d - CALL FUN_0040af60 ()
    @0040af7c - CALL FUN_00407210 ()
    @00407228 - SYMBOL: PTR__lread_0042e0b8
    @0040b109 - SYMBOL: s_PFF_LOADED_FILE_0042e578
    @0040b116 - CALL FUN_004063a0 ()
    @004063c1 - ds "mem_GetMemEx(): %ld bytes ('%s')\n"
    @00406501 - SYMBOL: s_mem_GetMemEx():_Failed_to_alloca_0042e3ac
    @00406501 - ds "mem_GetMemEx(): Failed to allocate %ld bytes ('%s')"
    @004063de - SYMBOL: PTR_DAT_00430f54
    @004063cb - CALL FUN_0040fc1a ()
    @0040fc43 - CALL FUN_00411a1e ()
    @00411dc8 - SYMBOL: PTR_FUN_00431380
    @00411fe2 - CALL __aulldiv ()
    @00411e87 - SYMBOL: s_null)_0042e78d
    @00411e00 - SYMBOL: PTR_FUN_00431384
    @00411e1e - CALL _strlen ()
    @00412078 - CALL FUN_00412194 ()
    @004121ae - CALL FUN_0041215f ()
    @0041217c - CALL FUN_00411909 ()
    @00411975 - CALL FUN_004153c8 ()
    @004153d3 - CALL _malloc ()
    @0041199e - CALL FUN_00414b2f ()
    @00414c54 - SYMBOL: PTR_GetLastError_0042e0e0
    @00414b88 - CALL FUN_00414a95 ()
    @00414af1 - SYMBOL: PTR_GetLastError_0042e0e0
    @00414ae4 - SYMBOL: PTR_SetFilePointer_0042e0a8
    @00414c07 - SYMBOL: PTR_WriteFile_0042e168
    @00411c77 - SYMBOL: PTR_DAT_0043139c
    @00411a8c - SYMBOL: switchdataD_0041213f
    @00411cf2 - CALL FUN_004154eb ()
    @00415534 - SYMBOL: PTR_WideCharToMultiByte_0042e14c
    @00411c94 - SYMBOL: u_null)_0042e77e
    @00411bbd - SYMBOL: PTR_DAT_00431150
    @00411e87 - ds "null)"
    @00411de9 - SYMBOL: PTR_FUN_0043138c
    @00411d82 - SYMBOL: PTR_DAT_00431398
    @00411fd0 - CALL __aullrem ()
    @004063d8 - SYMBOL: PTR_OutputDebugStringA_0042e0cc
    @004063c1 - SYMBOL: s_mem_GetMemEx():_%ld_bytes_('%s')_0042e3e0
    @0040b0b1 - CALL FUN_0040aec0 ()
    @0040aed1 - CALL FUN_0040ade0 ()
    @0040ae09 - CALL FUN_00416a2c ()
    @00416a73 - CALL FUN_004122fc ()
    @0041230b - SYMBOL: ExceptionList
    @0041235a - SYMBOL: PTR_LCMapStringA_0042e140
    @0041233e - SYMBOL: PTR_LCMapStringW_0042e13c
    @004123db - SYMBOL: PTR_MultiByteToWideChar_0042e144
    @00412509 - SYMBOL: PTR_WideCharToMultiByte_0042e14c
    @00416abc - CALL FUN_0040fe1b ()
    @0040fe42 - SYMBOL: PTR_HeapFree_0042e174
    @0040fe31 - CALL FUN_004130a1 ()
    @004132e0 - SYMBOL: PTR_VirtualFree_0042e130
    @0041338f - CALL FUN_00410090 ()
    @00410247 - SYMBOL: switchdataD_00410370
    @004100c5 - SYMBOL: switchdataD_004101d8
    @00410252 - SYMBOL: PTR_caseD_0_00410320
    @004100dd - SYMBOL: switchdataD_004100f4
    @004100ec - SYMBOL: switchdataD_0041016c
    @0041026d - SYMBOL: switchdataD_0041027c
    @00413365 - SYMBOL: PTR_HeapFree_0042e174
    @004132f8 - CALL VirtualFree ()
    @00416a82 - CALL _malloc ()
    @0040aef6 - CALL FUN_0040b220 ()
    @0040b268 - SYMBOL: PTR_MessageBoxA_0042e204
    @0040b258 - SYMBOL: s_pffmgr_0042e588
    @0040b258 - ds "pffmgr"
    @0040b245 - ds "Error reading %s in PFF file %s."
    @0040b245 - SYMBOL: s_Error_reading_%s_in_PFF_file_%s._0042e590
    @0040af29 - CALL FUN_00407260 ()
    @00407278 - SYMBOL: PTR__llseek_0042e0bc
    @0040b109 - ds "PFF LOADED FILE"
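The traversal behind a listing like this is a plain depth-first walk over the call graph. The sketch below is a toy model and not the Ghidra script itself: the graph is a hand-built map, `Ref` and `walk` are hypothetical names, and the output format only loosely imitates the real one.

```rust
use std::collections::{HashMap, HashSet};

/// Something a function body refers to: another function or a string.
enum Ref {
    Call(&'static str),
    Text(&'static str),
}

/// Depth-first walk from `func`, appending one indented line per reference.
/// `seen` keeps the walk from looping forever on recursive call chains.
fn walk(
    graph: &HashMap<&'static str, Vec<Ref>>,
    func: &'static str,
    depth: usize,
    seen: &mut HashSet<&'static str>,
    out: &mut Vec<String>,
) {
    out.push(format!("{}CALL {}", "  ".repeat(depth), func));
    if !seen.insert(func) {
        return; // already expanded once, do not descend again
    }
    if let Some(refs) = graph.get(func) {
        for r in refs {
            match r {
                Ref::Call(callee) => walk(graph, *callee, depth + 1, seen, out),
                Ref::Text(s) => out.push(format!("{}ds {:?}", "  ".repeat(depth + 1), s)),
            }
        }
    }
}
```

The real script gets its edges from Ghidra's reference database instead of a `HashMap`, but the visited set is the part that matters: without it, mutually recursive functions would never terminate.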

Oh look at that - the devs were kind enough to leave us function names in the log strings. So FUN_004063a0 is "mem_GetMemEx", FUN_0040fe1b frees something on the heap, FUN_00407260 is a wrapper for _llseek, and FUN_0040b220 shows a MessageBoxA with an error message - so that's definitely an error handler of sorts. Even better, FUN_0040fc1a accepts a format string as its SECOND argument - I bet that's fprintf! So let's spend some time renaming the nearby functions that we can figure out. Eventually we get:

    byte * FUN_0040b0a0(uint *param_1,undefined4 *param_2)
    {
      bool bVar1;
      undefined3 extraout_var;
      byte *pbVar2;
      UINT UVar3;

      bVar1 = FUN_0040aec0(param_1,param_2);
      if (CONCAT31(extraout_var,bVar1) == 0) {
        param_2[0x2d] = 1;
        pbVar2 = NULL;
      }
      else {
        UVar3 = seek_file(0,2,param_2);
        seek_file(0,0,param_2);
        param_2[0x2c] = UVar3;
        DAT_0047fb44 = UVar3;
        pbVar2 = (byte *)mem_GetMemEx(UVar3,4,0,"PFF LOADED FILE");
        read_file(pbVar2,UVar3,param_2);
        if ((*(uint *)param_2[0x27] & 1) != 0) {
          FUN_0040b060(pbVar2,UVar3);
        }
        param_2[0x2d] = 0;
      }
      return pbVar2;
    }

Oh wow, that looks... quite reasonable! And all I did was rename some functions based on the strings or known function calls they contained. Neat! OK, I'm guessing here, but it looks like FUN_0040aec0 maybe reads the file? Overall, param_2 must be something like a FILE *. Now what's this odd check after we read the file..?
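The seek/seek/allocate/read sequence in the middle is the classic "how big is this thing" idiom. Here is a minimal Rust sketch of the same pattern, assuming the seek modes 2 and 0 mean "from the end" and "from the start" as they do for _llseek; `load_whole` is a name I made up.

```rust
use std::io::{self, Read, Seek, SeekFrom};

/// Load a whole stream the way the decompiled code appears to: seek to the
/// end to learn the size, rewind, allocate, then read exactly that many bytes.
fn load_whole<R: Read + Seek>(src: &mut R) -> io::Result<Vec<u8>> {
    let size = src.seek(SeekFrom::End(0))?;     // like seek_file(0, 2, ...)
    src.seek(SeekFrom::Start(0))?;              // like seek_file(0, 0, ...)
    let mut buffer = vec![0u8; size as usize];  // like mem_GetMemEx(size, ...)
    src.read_exact(&mut buffer)?;               // like read_file(buffer, size, ...)
    Ok(buffer)
}
```

It works on anything that implements `Read + Seek`, so the same function handles a `File` on disk or a `Cursor` over bytes in memory.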

    void FUN_0040b060(byte *param_1,int param_2)
    {
      uint uVar1;

      if ((param_1 != NULL) && (param_2 != 0)) {
        uVar1 = 0x312a4ce;
        do {
          uVar1 = uVar1 << 7 | uVar1 >> 0x19;
          *param_1 = *param_1 ^ (byte)uVar1;
          param_1 = param_1 + 1;
          param_2 = param_2 + -1;
        } while (param_2 != 0);
      }
      return;
    }

Bit operations? That's odd. XOR? If your spidey-senses aren't tingling yet, don't fret. The year is 2025. This function calls no other functions and touches nothing but the buffer it is handed - it's a perfect contender for what I call "vibe decoding".

Nice! You can read more about what it is, but essentially it's a function that can both decrypt and encrypt data. Run encrypted data through it and you get plaintext. Run plaintext through it and you get encrypted data. "Encrypted" is generous by modern standards, though. But hint hint - if you look around online forums you'll find references to the game's files being encrypted. And conveniently the decryption key is right there in uVar1. At this point I spent some time starting from the system calls, checking what kinds of data they accept, and propagating those types everywhere. Eventually our target function FUN_0040b0a0 will look like this:
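The decompiled loop ports to Rust almost line for line, because `x << 7 | x >> 0x19` on a 32-bit value is just a rotate left by 7. A minimal sketch, with `rolling_xor` and `SEED` as names of my own choosing:

```rust
/// Seed taken from the decompiled function (`uVar1 = 0x312a4ce`).
const SEED: u32 = 0x0312_a4ce;

/// XOR every byte with the low byte of a key that is rotated left by
/// 7 bits before each use.
fn rolling_xor(buffer: &mut [u8], mut key: u32) {
    for byte in buffer.iter_mut() {
        key = key.rotate_left(7);
        *byte ^= key as u8;
    }
}
```

Since the key stream depends only on the seed and the position, and XOR undoes itself, running the function twice over the same buffer gives back the original bytes.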

    void * read_resource(char *archive_filename,PFF_STRUCT *pff_data)
    {
      uint is_found;
      void *buffer;
      uint data_size;

      is_found = find_archived_resource_and_seek_file_to_it(archive_filename,pff_data);
      if (is_found == 0) {
        pff_data->is_error = 1;
        buffer = NULL;
      }
      else {
        // mode == 2 is seek to end of logical region
        data_size = seek_to_found_entry_start(0,2,pff_data);
        // mode == 0 is from beginning of the file.
        seek_to_found_entry_start(0,0,pff_data);
        pff_data->current_entry_data_size = data_size;
        GAME_DATA.CURRENT_ENTRY_DATA_SIZE = data_size;
        buffer = allocateTaggedBlock(data_size,4,0,"PFF LOADED FILE");
        read_partial_resource(buffer,data_size,pff_data);
        if ((pff_data->last_found_entry->is_encrypted & 1) != 0) {
          encodeBlockWithRollingXor(buffer,data_size);
        }
        pff_data->is_error = 0;
      }
      return buffer;
    }

I asked AI for help several more times during this effort - this "allocateTaggedBlock" function is part of a custom memory management engine that's found all throughout the game. You'll also notice that only one other function calls read_resource. That function also has a nice little error string inside of it which names it - FUN_407960 is called "file_LoadFileEx". From there I spent some more time marking nearby functions. It turns out that the main PFF file name isn't stored in the binary - it's loaded from a sort of config file called front.cfg. But overall, the read_resource function has everything you need to read the PFF file. Here's the resulting PFF extractor:

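    use std::{fs::File, io::{self, Read, Seek, SeekFrom, Write}, path::Path};
    use binread::{BinRead, BinReaderExt};

    // Key recovered from the decompiled XOR routine (uVar1 = 0x312a4ce).
    const TACHYON_KEY: u32 = 0x312a4ce;

    #[derive(BinRead, Debug)]
    struct PffHeader {
        header_size: u32,
        magic: [char; 4],
        entry_count: u32,
        entry_size: u32,
        entry_start_offset: u32,
    }

    #[derive(BinRead, Debug)]
    struct PffEntry {
        is_encrypted: u32,
        data_offset: u32,
        data_size: u32,
        d: u32, // purpose not identified yet
        name: [u8; 0x10],
        e: u32, // purpose not identified yet
    }

    fn tachyon_decrypt(buffer: &mut [u8], mut key: u32) {
        for byte in buffer.iter_mut() {
            key = key << 7 | key >> 0x19;
            *byte ^= key as u8;
        }
    }

    // Assumed helper (not shown in the original listing): names are
    // NUL-padded to 16 bytes, so keep everything before the first NUL.
    fn bytes_to_string(bytes: &[u8]) -> io::Result<String> {
        let end = bytes.iter().position(|&b| b == 0).unwrap_or(bytes.len());
        String::from_utf8(bytes[..end].to_vec())
            .map_err(|e| io::Error::new(io::ErrorKind::InvalidData, e))
    }

    fn read(path: &Path) -> io::Result<()> {
        let mut pff = File::open(path)?;
        // The file comes from a Windows/x86 game, so read it as little-endian.
        let h: PffHeader = pff.read_le().expect("Unable to parse PFF header");
        if h.magic != ['P', 'F', 'F', '3'] {
            return Err(io::Error::new(io::ErrorKind::InvalidData, "Not a valid PFF3 file."));
        }
        std::fs::create_dir_all("extracted")?;
        for entry_id in 0..h.entry_count {
            // Widen before multiplying so large archives cannot overflow u32.
            let entry_pos = h.entry_start_offset as u64 + entry_id as u64 * h.entry_size as u64;
            pff.seek(SeekFrom::Start(entry_pos))?;
            let entry: PffEntry = pff.read_le().expect("Unable to parse PFF entry");
            let filename = bytes_to_string(&entry.name)?.to_ascii_lowercase();
            pff.seek(SeekFrom::Start(entry.data_offset as u64))?;
            let mut buffer = vec![0u8; entry.data_size as usize];
            pff.read_exact(&mut buffer)?;
            // Mirror read_resource: decrypt only when the low bit of the flag is set.
            if entry.is_encrypted & 1 != 0 {
                tachyon_decrypt(&mut buffer, TACHYON_KEY);
            }
            let mut f = File::create(format!("extracted/{filename}"))?;
            f.write_all(&buffer)?;
        }
        Ok(())
    }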
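If you'd rather not pull in a crate just to list what's inside an archive, the same layout can be walked with the standard library alone. This is a sketch under the assumptions of the structs above (little-endian fields, 16-byte NUL-padded names at offset 16 of each entry); `u32_at`, `entry_name`, and `list_entries` are hypothetical helpers, and it works on an in-memory image rather than a file.

```rust
/// Read a little-endian u32 at `offset`, or None if the slice is too short.
fn u32_at(data: &[u8], offset: usize) -> Option<u32> {
    let b = data.get(offset..offset.checked_add(4)?)?;
    Some(u32::from_le_bytes([b[0], b[1], b[2], b[3]]))
}

/// Entry name: NUL-padded bytes, cut at the first NUL and lowercased.
fn entry_name(raw: &[u8]) -> String {
    let end = raw.iter().position(|&b| b == 0).unwrap_or(raw.len());
    String::from_utf8_lossy(&raw[..end]).to_ascii_lowercase()
}

/// List (name, data_offset, data_size) for every entry of a PFF3 image.
/// Returns None on a bad magic or on any out-of-bounds offset.
fn list_entries(pff: &[u8]) -> Option<Vec<(String, u32, u32)>> {
    if pff.get(4..8)? != &b"PFF3"[..] {
        return None;
    }
    let entry_count = u32_at(pff, 8)? as usize;
    let entry_size = u32_at(pff, 12)? as usize;
    let table_start = u32_at(pff, 16)? as usize;
    let mut entries = Vec::new();
    for i in 0..entry_count {
        let base = table_start.checked_add(i.checked_mul(entry_size)?)?;
        let name = entry_name(pff.get(base.checked_add(16)?..base.checked_add(32)?)?);
        entries.push((name, u32_at(pff, base + 4)?, u32_at(pff, base + 8)?));
    }
    Some(entries)
}
```

Returning `Option` instead of panicking is a deliberate choice here: a truncated or corrupted archive just yields `None` rather than an out-of-bounds crash.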
